Modern Know Your Customer (KYC) systems use advanced technologies to onboard customers remotely. In the process, a user typically presents an identification document and then takes a selfie. Algorithms then compare the photo on the identification document with the selfie to authenticate the user’s identity. This method has streamlined the KYC process, enabling companies to onboard customers remotely without sacrificing the integrity and security of their systems.

However, presentation attacks are a common way for fraudsters to manipulate this process. These attacks involve presenting a counterfeit or manipulated object or media to a biometric sensor. In the case of remote KYC systems, this might involve using a photo or video in place of a live selfie. This type of fraud has been widely exploited, prompting the development of standards such as ISO/IEC 30107. The standard provides methods for testing the ability of biometric technology to resist presentation attacks. Independent laboratories such as iBeta Quality Assurance Lab test solutions for conformance with it and issue confirmations at Levels 1 and 2. Such a confirmation validates that a particular Presentation Attack Detection (PAD) solution provides the necessary level of protection against presentation attacks.

Despite these protective measures, the digital identity industry now faces a new type of attack called an injection attack. In this scenario, an image or video isn’t presented to the camera but is instead injected using a virtual camera, a hardware USB capture device, or even JavaScript code that hijacks the video stream from the camera. The picture below demonstrates how an external USB capturer could be used for injection.

To the operating system, a standard USB capturer appears as a regular USB camera. However, the video stream doesn’t originate from a camera lens but from the display of a Source laptop, mirrored to and captured by the USB device. This can be achieved with a simple display extension function activated by plugging a USB-C cable into a laptop. Any photo or video opened on the screen of the Source laptop is then streamed as if it were coming from the USB camera. Neither the operating system nor the application running on the Sink laptop can recognize that the video stream isn’t coming from a camera lens, making this a low-cost yet effective trick for fraudsters.

How can this type of injection be detected and prevented?

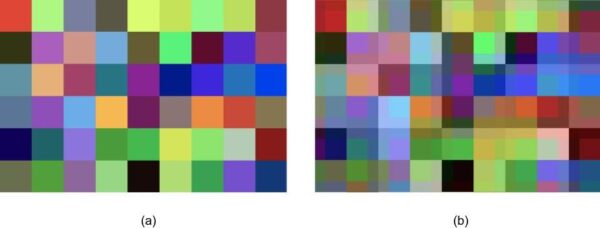

This is where Artificial Intelligence (AI) steps in. Interestingly, the video stream passed from the display and through the USB capturer is compressed twice. The first compression occurs when the original video is encoded and stored on the hard drive of the Source laptop. The second occurs when the signal is transferred through the USB capturer: despite the high resolution of the original video format, it picks up artifacts from this recompression. These artifacts are a byproduct of compression algorithms that strive to minimize the size of the video data or convert it from one format to another, often resulting in a loss of image detail. These alterations can manifest as blurry or blocky images, color banding, or smearing effects.

Figure. A small area of the image, enlarged: (a) original and (b) recompressed.
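To make the idea of a measurable recompression artifact concrete, here is a minimal sketch, not the detector described in this article. It assumes NumPy, and the names `blockiness` and `simulate_block_compression` are hypothetical helpers introduced for illustration. The second function is only a crude stand-in for a real codec: it flattens each 8x8 block toward its mean. The first compares pixel differences at block boundaries with differences inside blocks.

```python
import numpy as np

def simulate_block_compression(gray, block=8, keep=0.3):
    """Crude stand-in for lossy compression (NOT a real codec):
    pull every pixel toward the mean of its block x block tile."""
    g = np.asarray(gray, dtype=np.float64)
    h = g.shape[0] - g.shape[0] % block
    w = g.shape[1] - g.shape[1] % block
    tiles = g[:h, :w].reshape(h // block, block, w // block, block)
    means = tiles.mean(axis=(1, 3), keepdims=True)
    return (means + keep * (tiles - means)).reshape(h, w)

def blockiness(gray, block=8):
    """Mean absolute pixel difference across block boundaries divided by
    the mean difference inside blocks. Values near 1 mean no visible grid."""
    g = np.asarray(gray, dtype=np.float64)
    dh = np.abs(np.diff(g, axis=1))  # differences between horizontal neighbors
    dv = np.abs(np.diff(g, axis=0))  # differences between vertical neighbors
    # Difference index j spans pixels j and j+1, so it crosses a block
    # boundary when j % block == block - 1.
    col_edge = np.arange(dh.shape[1]) % block == block - 1
    row_edge = np.arange(dv.shape[0]) % block == block - 1
    boundary = np.concatenate([dh[:, col_edge].ravel(), dv[row_edge, :].ravel()])
    interior = np.concatenate([dh[:, ~col_edge].ravel(), dv[~row_edge, :].ravel()])
    return float(boundary.mean() / (interior.mean() + 1e-9))

# Synthetic test image: diagonal ramp plus mild sensor-like noise.
rng = np.random.default_rng(0)
ramp = np.linspace(0, 255, 64)
original = 0.5 * ramp[None, :] + 0.5 * ramp[:, None] + rng.normal(0, 2, (64, 64))
recompressed = simulate_block_compression(original)

print("original:    ", blockiness(original))
print("recompressed:", blockiness(recompressed))
```

On this synthetic input the recompressed image scores noticeably higher than the original. Real capture chains are far less tidy, which is one reason a trained model is a better fit than a single hand-written metric.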

These artifacts are often too subtle for the human eye but can be detected by AI specifically trained for this purpose. Just as a neural network can be trained to recognize objects, faces, and presentation attacks, it can also distinguish between images sourced from an actual camera and those injected through a USB capturer or virtual camera. These injection methods typically involve additional processing steps and therefore cause more loss during video signal transmission, providing a basis for detection.
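The train-then-classify workflow can be illustrated at toy scale. The sketch below is deliberately not a neural network: it swaps in logistic regression on one hand-crafted feature, trained on synthetic patches, so that it runs in seconds with NumPy alone. Everything in it is an assumption made for illustration: the "injected" class is simulated with a simple blur standing in for detail lost in transmission, and the helper names are hypothetical.

```python
import numpy as np

def high_freq_energy(patch):
    """Log of the mean absolute 4-neighbor Laplacian: a crude detail measure."""
    p = np.asarray(patch, dtype=np.float64)
    lap = (4 * p[1:-1, 1:-1] - p[:-2, 1:-1] - p[2:, 1:-1]
           - p[1:-1, :-2] - p[1:-1, 2:])
    return float(np.log(np.abs(lap).mean() + 1e-9))

def smooth(patch):
    """Separable [1, 2, 1] / 4 blur: toy stand-in for detail lost in recompression."""
    p = (patch[:, :-2] + 2 * patch[:, 1:-1] + patch[:, 2:]) / 4
    return (p[:-2, :] + 2 * p[1:-1, :] + p[2:, :]) / 4

def make_dataset(n, rng, size=32):
    """Synthetic patches: label 0 = 'camera', label 1 = 'injected' (smoothed)."""
    feats, labels = [], []
    for i in range(n):
        sigma = rng.uniform(5.0, 10.0)  # vary the noise level per patch
        patch = 128 + rng.normal(0.0, sigma, (size, size))
        label = i % 2
        if label == 1:
            patch = smooth(patch)
        feats.append(high_freq_energy(patch))
        labels.append(label)
    return np.array(feats), np.array(labels, dtype=np.float64)

def train_logistic(x, y, lr=0.5, steps=2000):
    """Plain gradient descent on the logistic loss for one standardized feature."""
    mu, sd = x.mean(), x.std()
    z = (x - mu) / sd
    w, b = 0.0, 0.0
    for _ in range(steps):
        p = 1 / (1 + np.exp(-(w * z + b)))
        w -= lr * np.mean((p - y) * z)
        b -= lr * np.mean(p - y)
    return w, b, mu, sd

def predict(x, params):
    w, b, mu, sd = params
    return (w * (x - mu) / sd + b > 0).astype(np.float64)

rng = np.random.default_rng(0)
x_train, y_train = make_dataset(200, rng)
x_test, y_test = make_dataset(100, rng)
params = train_logistic(x_train, y_train)
accuracy = float((predict(x_test, params) == y_test).mean())
print(f"held-out accuracy on synthetic patches: {accuracy:.2f}")
```

The synthetic classes are easy to separate by construction, so the accuracy here says nothing about real-world performance. A production detector would learn its features from real camera and injected footage rather than rely on a single statistic.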

Training an artificial neural network to perform this task typically requires a significant volume of samples. For instance, 10,000 to 100,000 samples might be enough to train a Minimum Viable Product (MVP) of a neural network based on convolutional layers. For a more accurate and robust detector, between 100,000 and 1 million samples might be needed. For commercial applications demanding high accuracy, 1 million samples or more could be required.

By investing in such AI solutions, digital identity verification processes can better combat the rising threat of injection attacks, enhancing the overall security of remote onboarding and other digital identification processes.