Biometric firm’s voice anti-spoofing earns top spot

New York, NY – September 24, 2019 – Today, ID R&D, the award-winning biometrics authentication leader and technology provider offering AI-based voice, face, and behavioral biometrics and voice and face anti-spoofing products, announces that its voice liveness detection technology achieved the highest accuracy in the Logical Access condition of the 2019 Automatic Speaker Verification Spoofing and Countermeasures Challenge (ASVspoof Challenge) (*1). The Logical Access condition covers voice spoofing attacks generated with the latest text-to-speech (TTS) synthesis and voice conversion (VC) technologies.

Synthetic and computer-generated speech threatens to usher in a future where users cannot tell whether a voice they hear on television, on the internet, or over the phone is authentic. Fraudsters can already replicate a user's voice from less than a minute of voice samples. As the technology advances, spoofed speech could wholly undermine business, personal, and governmental operations, and there is an urgent need for wide-scale adoption of countermeasures that detect and prevent such unauthorized access attempts.

In 2013, concerned biometrics authentication industry leaders combined their efforts and expertise to combat the growing threat of spoofed speech and launched the biennial ASVspoof Challenge initiative. The initiative, the largest and most comprehensive evaluation of voice spoofing countermeasures, aims to promote the development of technology that can reliably distinguish genuine speech from spoofed speech. The 2019 ASVspoof Challenge included 63 research teams from academic and commercial organizations. Each team submitted a solution designed to detect synthetic, recorded, or converted speech and agreed to have its accuracy evaluated.

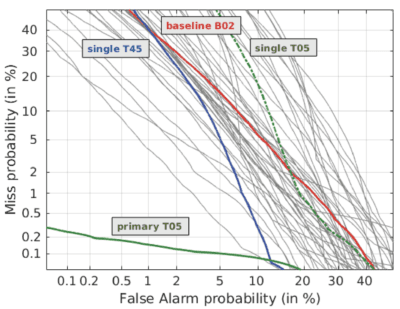

ID R&D’s anti-spoofing voice technology returned the lowest Equal Error Rate (EER), a metric that measures a solution’s accuracy in distinguishing genuine speech from spoofed speech. The EER is the point at which the two types of errors, falsely accepting a spoof as real and falsely labeling a real voice as a spoof, are equal. With an EER of .22%, ID R&D, or Team T05 in the green line in accompanying Graph A, scored best on an evaluation dataset.

Graph A:
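To illustrate how an Equal Error Rate is obtained, the following is a minimal Python sketch, not the official ASVspoof scoring tool. It assumes a countermeasure that outputs one score per audio trial, where higher scores mean "more likely genuine," and it treats every observed score as a candidate threshold. The function name `compute_eer` and the example scores are hypothetical.

```python
def compute_eer(genuine_scores, spoof_scores):
    """Estimate the Equal Error Rate (EER) of a spoofing countermeasure.

    Assumption (hypothetical scoring convention): higher scores mean
    "more likely genuine", and a trial is accepted as genuine when its
    score is >= the decision threshold.
    Returns (eer, threshold), with eer as a fraction in [0, 1].
    """
    if not genuine_scores or not spoof_scores:
        raise ValueError("need at least one genuine and one spoof score")

    best_gap = float("inf")
    best = (1.0, 0.0)
    # The error rates only change at observed scores, so each one is a
    # candidate threshold.
    for t in sorted(set(genuine_scores) | set(spoof_scores)):
        # False acceptance rate: spoofed trials accepted as genuine.
        far = sum(s >= t for s in spoof_scores) / len(spoof_scores)
        # False rejection rate: genuine trials rejected as spoofs.
        frr = sum(g < t for g in genuine_scores) / len(genuine_scores)
        gap = abs(far - frr)
        # Keep the threshold where the two error rates are closest.
        if gap < best_gap:
            best_gap = gap
            best = ((far + frr) / 2, t)
    return best


if __name__ == "__main__":
    genuine = [0.6, 0.8, 0.9, 0.4]
    spoof = [0.1, 0.5, 0.3, 0.7]
    eer, threshold = compute_eer(genuine, spoof)
    print(f"EER = {eer:.2%} at threshold {threshold}")
```

With a finite set of trials, the two error rates rarely match exactly. This sketch therefore takes the threshold that minimizes their difference and reports their average, which is a common approximation. For the example scores, one genuine trial (0.4) and one spoof (0.7) fall on the wrong side of the 0.6 threshold, so the sketch reports an EER of 25%. An EER of 0.22% means that roughly 2 in 1,000 trials are misclassified at that balanced operating point.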

ID R&D is a global biometric authentication leader. Beyond its voice authentication and voice anti-spoofing solutions, it offers facial and behavioral biometric authentication as well as passive facial anti-spoofing technology that requires only a single image. Its customers include banking and financial services, insurance, telecom, and healthcare companies that use its technology in mobile, web, call center, and IoT applications.

“As the number of crimes using synthetic or recorded voice increases, ID R&D is committed to delivering solutions that prevent even the most advanced spoofing attacks,” said Konstantin Simonchik, Chief Scientific Officer at ID R&D. “We are proud to have earned our top spot in the ASVspoof challenge and to contribute toward creating a future where interaction between users and technology is not just simple, but also secure.”

About ID R&D

ID R&D is an award-winning provider of multi-modal biometric security solutions based in New York, NY. With more than 25 years of experience in biometrics, the company's management and development teams apply the latest scientific breakthroughs to significantly enhance authentication experiences. ID R&D combines a science-driven, seamless authentication experience with the capabilities of a leading research and development team. ID R&D's focus is on behavioral biometrics, voice biometrics, voice and face anti-spoofing, keystroke dynamics, and biometric fusion. Learn more at www.idrnd.ai.

Additional resources:

ID R&D website: www.idrnd.ai

ASVspoof 2019 Challenge website: www.asvspoof.org/

ASVspoof 2019 results: www.isca-speech.org/archive/Interspeech_2019/pdfs/2249.pdf

Note:

(*1) ASVspoof 2019: Future Horizons in Spoofed and Fake Audio Detection

https://www.isca-speech.org/archive/Interspeech_2019/pdfs/2249.pdf

Contact:

Cindy Clement

cindy@clementpeterson.com